Notes

Quick recap

In this series so far, we installed Ollama and LM Studio to build a local AI agent environment, and ran mastra's sample code.

This time, we will build an AI agent that performs web searches and displays results using mastra's Agent and Tools features. In this article, we will implement a web search tool that the LLM can call and make sure the LLM recognizes and invokes it correctly. The first implementation is naive, so it will not work well, but you will experience that just giving tools to an LLM does not magically solve everything.

Environment

- mastra 2025-10-14

Version

mastra is not yet a stable framework, so behavior may change when the version updates. If it doesn't work as described, check the version you are using.

What is an AI agent?

Before diving into implementation, let's confirm what an AI agent means. Here we quote and follow the definition from Practical Introduction to AI Agents for Real‑World Use (KS Information Science).

An AI agent is an intelligent system that performs tasks while interacting with an environment to achieve a goal. The environment is where the AI agent acts, such as digital spaces like web browsers and desktop apps, or physical spaces like a room. Early intelligence was expressed with logical symbols, then shifted to rule‑based and learning models, and now corresponds to LLMs. LLMs gain conversational ability, massive knowledge, few‑shot learning, language understanding, and logical reasoning during training. Modern AI agents leverage LLM capabilities to perceive the environment, recall past experiences from memory, plan toward goals, and act.

Tools

The definition of an AI agent includes "perform tasks while interacting with the environment". An LLM, the core of an AI agent, basically takes sequence data, calculates the probability of "the next token," and selects a likely one. It then appends that to the sequence and repeats, predicting the next token again.

LLMs themselves do not have the ability to act on the environment. So we need to provide capabilities that let the LLM affect the environment. Such capabilities are called tools, and they are programs executed outside the LLM. Tools are organized in the following paper.

Implementing tools in mastra

We will follow the official documentation for mastra tools. This time we implement a web search tool.

For those who want to see the whole picture first, here is the full tool implementation.

Web Search Tool Implementation

1import { createTool } from "@mastra/core";2import * as cheerio from "cheerio";3import { z } from "zod";45export const webSearchTool = createTool({6id: "web_search",7description: "Search the web for information",8inputSchema: z.object({9query: z.string().describe("The query to search the web for"),10}),11outputSchema: z.object({12results: z.array(13z.object({14title: z.string(),15url: z.string().optional(),16description: z.string().optional(),17}),18),19}),20execute: async ({ context }) => {21const response = await fetch("https://html.duckduckgo.com/html/", {22method: "POST",23headers: {24"Accept-Language": "ja;q=0.9",25"User-Agent":26"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/141.0.0.0 Safari/537.36",27},28body: new URLSearchParams({29q: context.query,30b: "",31}),32});3334const html = await response.text();35const $ = cheerio.load(html);3637const results = $(".result")38.map((_index, result) => {39const $title = $(result).find(".result__a");40const titleText = $title.text().trim();41const titleUrl = $title.attr("href");4243const $snippet = $(result).find(".result__snippet");44const snippetText = $snippet.text().trim();4546return {47title: titleText,48url: titleUrl,49description: snippetText,50};51})52.get();5354return {55results,56};57},58});

Let's first look at how to implement a tool in mastra.

Tool overview

In mastra, you implement tools with createTool, or manage an MCP (Model Context Protocol) server via MCPClient and pass it to an Agent so the LLM can use those tools.

We will explain MCP after the web search agent is complete.

Here, we'll implement a web search tool using createTool, entirely within mastra.

For details on createTool, see the API reference. Here is the quick usage.

We implement the tool in src/mastra/tools/web-search-tool.ts.

1export const webSearchTool = createTool({2id: "web_search",3description: "Search the web for information",4inputSchema: /* ... */,5outputSchema: /* ... */,6execute: async ({ context }) => {7// ...8},9});

The object passed to createTool takes the tool ID, description, input schema, output schema, and tool logic.

The id should clearly express what the tool does.

The description is used by the LLM to decide when and how to use the tool, so explain its usage clearly and succinctly.

Tool input and output are JSON.

inputSchema and outputSchema define the JSON schema for inputs and outputs.

We define schemas with Zod.

execute implements the tool logic. The context argument is the input parsed by inputSchema, so you can access tool inputs through it.

Now let's look at inputSchema, outputSchema, and execute in more detail.

Input schema

For the web search tool, we only need a search query as input. Since the input schema is a JSON object, it looks like this.

1export const webSearchTool = createTool({2// ...3inputSchema: z.object({4query: z.string().describe("The query to search the web for"),5}),6// ...7});

The description explains what value to set for each property.

Here we say "a query for web search." It's written in English, but a Japanese‑capable LLM could also handle Japanese.

However, with smaller local LLMs, you might see a clear accuracy gap between Japanese and English.

You might omit description if the property name is self‑explanatory.

Output schema

Next, define the output schema.

1export const webSearchTool = createTool({2// ...3outputSchema: z.object({4results: z.array(5z.object({6title: z.string(),7url: z.string().optional(),8description: z.string().optional(),9}),10),11}),12// ...13});

Here we assume DuckDuckGo results will yield three items: title, URL, and site description. So we return a list of objects with those properties in results.

Tool logic

Now for the web search logic. We will scrape DuckDuckGo's search results. Scraping Google Search is explicitly prohibited by its terms, so we do not use it. DuckDuckGo doesn't explicitly allow scraping either, but it also doesn't explicitly forbid it (author's investigation), so we'll implement it as an LLM tool at your own risk. This article does not recommend scraping DuckDuckGo. Keep in mind that duckduckgo.com and html.duckduckgo.com are disallowed in their robots.txt.

Also, if you just want a web search tool, Ollama provides a Web Search API, so you may prefer that.

DuckDuckGo

DuckDuckGo is a search engine that emphasizes privacy. Unlike Google, it does not track via cookies.

If you search for how to scrape DuckDuckGo, you will find many articles using a Python library. But we want to implement this in TypeScript, so we won't use that approach.

I can think of three ways to scrape DuckDuckGo:

- Use a headless browser like Playwright or Puppeteer

- Parse HTML returned by https://html.duckduckgo.com/html/ or https://lite.duckduckgo.com/lite/

- Parse the response (JavaScript) from https://duckduckgo.com/d.js

Some libraries use the third approach internally, likely because parsing d.js avoids scraping bans on the surface.

But it requires sending a dummy request to obtain a token before searching (probably).

That is a hassle, so we'll scrape https://html.duckduckgo.com/html/.

I later learned about https://lite.duckduckgo.com/lite/, which seems lighter, so if you implement this yourself, try the Lite version.

Again, scraping is at your own risk.

Let's implement it.

Implementation

When you check the network request for https://html.duckduckgo.com/html/ search, you can see the HTML version uses a POST request. After removing unnecessary fields, the search works with a request like this.

1$ curl 'https://html.duckduckgo.com/html/' \2-H 'accept-language: ja' \3-H 'user-agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/141.0.0.0 Safari/537.36' \4--data-raw 'q=test&b='

It seems to check whether the request is from a browser using User-Agent, so we can't remove that header.

Also, the search language is controlled by Accept-Language, so here we fix it to ja.

For practical use, it would be better to allow English too, so we should allow the LLM to set Accept-Language.

But to keep the explanation simple, we accept only q as the input (the search query) and ignore others.

The response from https://html.duckduckgo.com/html/ is HTML, so we parse it with cheerio and extract results.

With that in mind, the tool logic is as follows.

1export const webSearchTool = createTool({2// ...3execute: async ({ context }) => {4const response = await fetch("https://html.duckduckgo.com/html/", {5method: "POST",6headers: {7"Accept-Language": "ja",8"User-Agent":9"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/141.0.0.0 Safari/537.36",10},11body: new URLSearchParams({12q: context.query,Get query from context defined in inputSchema13b: "",14}),15});1617const html = await response.text();18const $ = cheerio.load(html);1920const results = $(".result")21.map((_index, result) => {22const $title = $(result).find(".result__a");23const titleText = $title.text().trim();24const titleUrl = $title.attr("href");2526const $snippet = $(result).find(".result__snippet");27const snippetText = $snippet.text().trim();2829return {30title: titleText,31url: titleUrl,32description: snippetText,33};34})35.get();3637return {38results,39};40},41});

The details of this logic are not essential for mastra usage or agent tools, so I won't explain it.

See the docs for fetch and cheerio if needed.

Now that we have the web search tool, let's implement the agent.

Web search agent

Now we connect the LLM and tools and define an AI agent.

Differences from the previous article

Before implementing, here are the differences from the previous article Do Local AI Agents Dream of Electric Sheep? (Environment Setup).

1import { createOpenAICompatible } from "@ai-sdk/openai-compatible";23export const lmstudio = createOpenAICompatible({4name: "lmstudio",5baseURL: "http://localhost:1234/v1",6apiKey: "lm-studio",7});89export const ollama = createOpenAICompatible({10name: "ollama",11baseURL: "http://localhost:11434/v1",12});

In the last two articles we wrote lmstudio and ollama in src/mastra/agents/weather-agent.ts, but we moved them to src/config/providers.ts because they are needed elsewhere.

Implementation

The Agent implementation looks like this.

1import { Memory } from "@mastra/memory";2import { ollama } from "../../config/providers";3import { LibSQLStore } from "@mastra/libsql";4import { Agent } from "@mastra/core/agent";5import { webSearchTool } from "../tools/web-search-tool";67export const webSearchAgent = new Agent({8name: "Web Search Agent",9instructions: `10You are a helpful web search assistant that can help users find information on the web.11When the user asks for information, you should use the webSearchTool to search the web for information.12`,13model: lmstudio("openai/gpt-oss-20b"),14tools: { webSearchTool },Pass in the webSearchTool15memory: new Memory({16storage: new LibSQLStore({17url: "file:../mastra.db", // path is relative to the .mastra/output directory18}),19}),20});

It's almost the same as the sample Weather Agent.

Here, instructions simply tells the agent to use webSearchTool to search and answer user queries.

We pass the tool in tools.

With this, the LLM can use webSearchTool, and instructions tells it when to use it,

so the LLM can decide the right timing and perform web searches.

Register the agent

Now that the Agent is implemented, add webSearchAgent to the Mastra instance in src/mastra/index.ts.

src/mastra/index.ts

Defining an Agent is not enough; it must be registered in mastra.

To use agents and workflows in mastra, register them in the Mastra instance exported as mastra in src/mastra/index.ts.

Specifically, make the following change.

1import { Mastra } from "@mastra/core/mastra";2import { PinoLogger } from "@mastra/loggers";3import { LibSQLStore } from "@mastra/libsql";4import { weatherWorkflow } from "./workflows/weather-workflow";5import { weatherAgent } from "./agents/weather-agent";6import { webSearchAgent } from "./agents/web-search-agent";78export const mastra = new Mastra({9workflows: { weatherWorkflow },10-agents: { weatherAgent },11+agents: { weatherAgent, webSearchAgent },Add webSearchAgent12storage: new LibSQLStore({13// stores observability, scores, ... into memory storage, if it needs to persist, change to file:../mastra.db14url: ":memory:",15}),16logger: new PinoLogger({17name: "Mastra",18level: "info",19}),20telemetry: {21// Telemetry is deprecated and will be removed in the Nov 4th release22enabled: false,23},24observability: {25// Enables DefaultExporter and CloudExporter for AI tracing26default: { enabled: true },27},28});

Try the web search agent

Start mastra with npm run dev, then open http://localhost:4111/agents in your browser.

You should see Web Search Agent in the list.

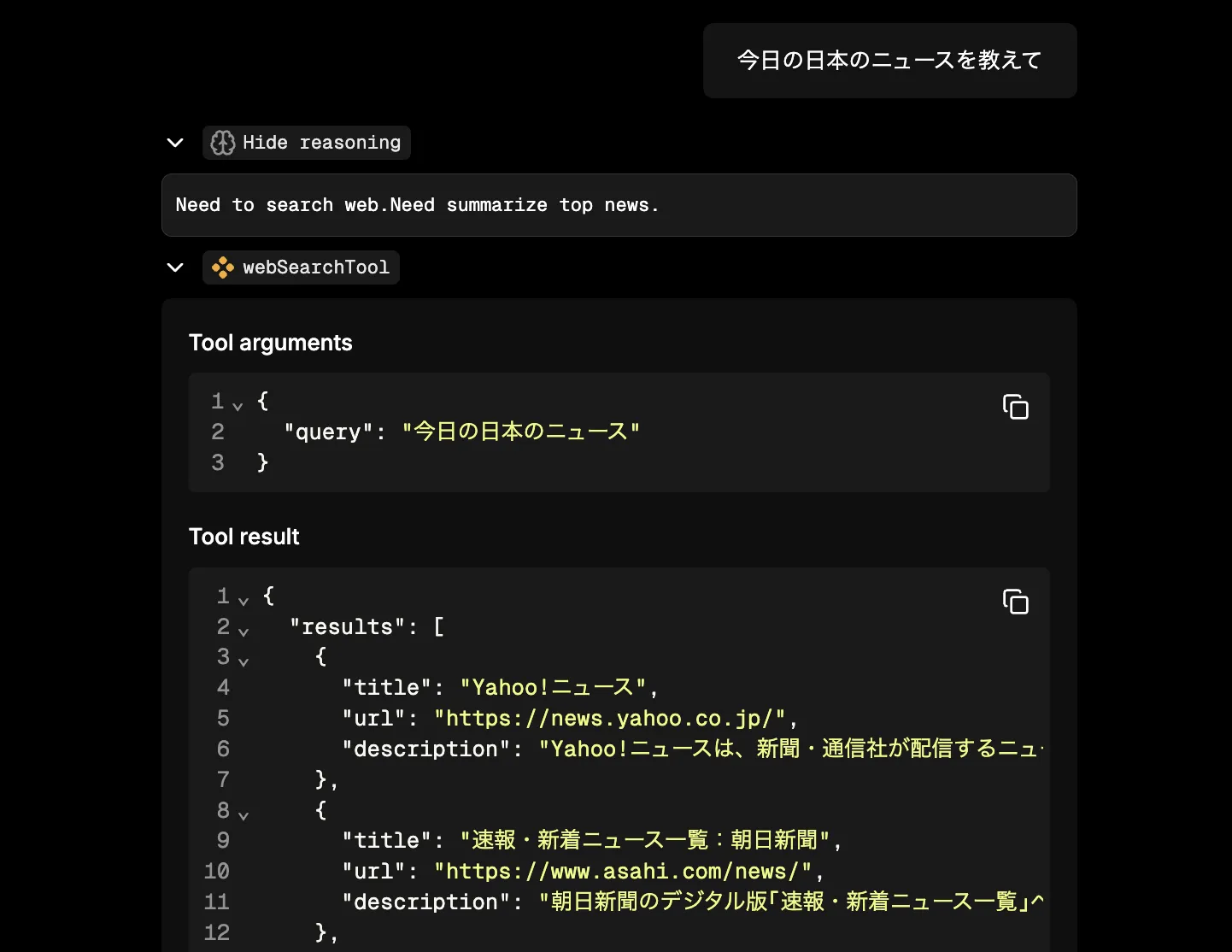

Select Web Search Agent and ask about today's news in the chat UI.

You can see that webSearchTool is called along with a plausible answer.

However, if you inspect the tool call, you'll see it's not being used the way you expect.

When humans search the web for today's news, we don't type "today's news in Japan" as a sentence. I hear that English speakers sometimes type full sentences into Google‑like search engines, but Japanese speakers usually search with keywords like "Japan news".

"Today's news in Japan" might yield decent results, but even when you ask other questions, the agent tends to pass parts of your prompt directly as a query.

This shows that simply giving the LLM a tool does not always make it use it appropriately. To improve it, you can instruct the agent about what to think before searching, or implement a separate agent that derives search keywords from the user prompt and use that reasoning for the search.

In the next article, we will try such improvements.

Closing

In this article, we implemented a tool that performs web search (DuckDuckGo) and made the LLM use it via mastra. As a result, we experienced that simply giving a web search tool to an LLM does not guarantee proper searches. In the next article, we will instruct the LLM on what to consider before searching and use workflows to search more systematically.

Once the LLM can search with proper keywords, the next phase is to load the pages that hit in search and implement tasks based on those results.